Wednesday, August 5, 2015

[JL] VR Ocean

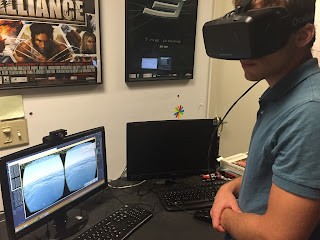

I now have a VR-ocean up and working with real time GPU ray tracing for Oculus Rift using G3D::VRApp. It's very soothing to watch the waves. Open source code and demo will be coming soon after code cleanup.

Tuesday, August 4, 2015

[MM] Victory, with < hour to go

Here we have it, shoddy full body tracking with an extra glitch effect (an adaptation of https://www.shadertoy.com/view/4t23Rc, applied to things with an alpha channel)!

You can see the calibration isn't quite right when I lean over to shut off the video at the end. I definitely need an automatic calibration method: manual calibration is too hard to get right (I spent way too long on it today) and too easy to knock out of whack.

My next steps are definitely:

- Integrate an automatic calibration method, for getting the relative transform between the oculus camera and the kinect.

- Wire up my 2nd kinect

- Try generating a mesh instead of a point cloud.
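To make the "relative transform" in the first step concrete, here is a minimal sketch of applying a rigid transform (rotation plus translation) to carry a point from one sensor's frame into another's. The `Vec3` and `RigidTransform` types and the `yawThenTranslate` helper are hypothetical, written only for this illustration; they are not G3D, Oculus, or Kinect SDK types, and a real calibration would estimate a full rotation rather than a single yaw angle.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Hypothetical rigid transform: row-major rotation matrix followed by a translation.
struct RigidTransform {
    float r[3][3];
    Vec3  t;
};

// Maps point p from the source frame into the destination frame: R * p + t.
Vec3 apply(const RigidTransform& m, const Vec3& p) {
    Vec3 out;
    out.x = m.r[0][0] * p.x + m.r[0][1] * p.y + m.r[0][2] * p.z + m.t.x;
    out.y = m.r[1][0] * p.x + m.r[1][1] * p.y + m.r[1][2] * p.z + m.t.y;
    out.z = m.r[2][0] * p.x + m.r[2][1] * p.y + m.r[2][2] * p.z + m.t.z;
    return out;
}

// Builds a transform that rotates by `radians` about +Y and then translates by t.
RigidTransform yawThenTranslate(float radians, const Vec3& t) {
    assert(std::isfinite(radians));
    const float c = std::cos(radians);
    const float s = std::sin(radians);
    RigidTransform m = { { { c,    0.0f, s    },
                           { 0.0f, 1.0f, 0.0f },
                           { -s,   0.0f, c    } },
                         t };
    return m;
}
```

An automatic calibration would solve for the rotation and translation from observations; this sketch only shows how the result would be used once it is known.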

This was a super fun jam, and I know how I'd approach things differently if I had to do it all over.

A Moving Light Source in a Dark Scene

Since my last post I have worked on a couple of small things to make the hand tracking and visualization a little neater. I also worked to set up the initial experience that led me to work with the leap motion in the first place.

For those who remember, my original goal was to create a VR experience where the subject is placed in a dark environment and controls the only light source with their hands. Below is a video of what I have done so far.

In the original example the light source takes the form of a torch that is constantly on. In my experience I made it so that the light source the player controls is in the form of an orb that can be summoned by looking at your right hand while it is flat and facing upwards. If you tilt your hand or close your hand past a certain amount the light source disappears.
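The summon/dismiss rule could be sketched roughly as below. This is not the actual jam code: the `HandSample` struct, the threshold values, and the assumption that the palm normal is already expressed in a frame where +Y is up are all made up for illustration. A hand tracker would supply the palm normal and a grab amount in its own coordinate frame.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical per-frame hand sample; not a Leap Motion SDK type.
struct HandSample {
    float normalX, normalY, normalZ; // unit palm normal, pointing out of the palm
    float grab;                      // 0 = open hand, 1 = closed fist
    bool  isRight;                   // true for the right hand
};

// Assumed thresholds, chosen only for illustration.
const float kMaxTiltDegrees = 30.0f;
const float kMaxGrab        = 0.5f;

// Returns true while the right palm is roughly flat, facing up (+Y), and open.
bool orbVisible(const HandSample& h) {
    assert(h.grab >= 0.0f && h.grab <= 1.0f);
    const float kPi = 3.14159265f;
    // The dot product of the palm normal with world up (0, 1, 0) is just its Y component.
    const float cosTilt = h.normalY;
    const float minCos  = std::cos(kMaxTiltDegrees * kPi / 180.0f);
    return h.isRight && (cosTilt >= minCos) && (h.grab <= kMaxGrab);
}
```

In practice a small hysteresis band around each threshold would probably help keep the orb from flickering when the hand hovers near the limit.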

Williams College VRJam Site Pictures

[MMc] Body-space HUD layer working

| Iron Man-style 2.5D Body Space HUD |

[JL] Ocean Waves and Movement

I'm Jamie Lesser, a student at Williams College and a researcher in the Williams College Graphics Lab. I will be joining for the second half of the VR Jam and hope to explore sea motion. Is the visual component of sitting on a bobbing boat on the ocean enough to feel like you are really bobbing? I find this question especially interesting because it requires no movement from the user, and so should be convincing in most settings. The main drawback would be increased motion sickness for those who are sea sensitive.

[MMc] Overnight GUI improvements

I made some changes last night, working from my laptop without a DK2. This verifies that you can use VRApp without the physical hardware installed (my brand-new laptop with Apple's idea of a "fast" GPU can only render at 6 fps, so you wouldn't want a DK2 attached).

[MM] Self sabotage

Instead of hitting "publish" on the last blog post and going to sleep, I stayed up and manually aligned the camera (by flying it around and micro adjusting, nothing even smart), to get the first video of the generated avatar. Ugly as heck (even left a debug coordinate frame in...) but really cool in-person. I can't wait to improve it, but I really do need sleep to make this jam the best it can be.

Monday, August 3, 2015

[MM] Update, point clouds

Lots of hardware issues and some software ones mean this update isn't the most exciting, but it is obviously better than before! I am rendering a point cloud generated from one kinect (which wasn't the one I started with... hardware issues...) stably in world space. Here I did a programmer dance for you:

Next steps:

- Turn off the computer

- Instead of taking a break, do wire management and figure out a decent setup for capture in my apartment.

- Manually align oculus frame and head position in point cloud

- Profile and optimize so that we run at 75 Hz.

- Make the point cloud flicker in a cooler way.

- Claim Victory.

- Add automatic calibration between oculus and kinect

- Add a second kinect

- Add a third kinect

- Experiment with other rendering techniques.
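For readers unfamiliar with how a depth image becomes a point cloud, here is a minimal sketch of the standard pinhole back-projection. It is not the code used in this project: the `Intrinsics` values are placeholders (real ones come from the sensor's calibration), and the function name and layout are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point3 { float x, y, z; };

// Hypothetical pinhole intrinsics: focal lengths and principal point, in pixels.
struct Intrinsics { float fx, fy, cx, cy; };

// Back-projects a row-major depth image (metres, 0 = no reading) into
// camera-space points, one point per valid pixel.
std::vector<Point3> depthToPoints(const std::vector<float>& depth,
                                  std::size_t width, std::size_t height,
                                  const Intrinsics& k) {
    assert(depth.size() == width * height);
    std::vector<Point3> points;
    points.reserve(depth.size());
    for (std::size_t v = 0; v < height; ++v) {
        for (std::size_t u = 0; u < width; ++u) {
            const float z = depth[v * width + u];
            if (z <= 0.0f) { continue; } // skip pixels with no depth reading
            Point3 p;
            p.x = (static_cast<float>(u) - k.cx) * z / k.fx;
            p.y = (static_cast<float>(v) - k.cy) * z / k.fy;
            p.z = z;
            points.push_back(p);
        }
    }
    return points;
}
```

The resulting points are in the depth camera's own frame; aligning them with the headset is the calibration problem listed in the steps above.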

[MMc] First successful HUD rendering in VR

Pressing the Tab key now moves the G3D GUI (everything in onGraphics2D) from the debugging mirror display into the VR world. It appears in front of the HMD, floating about 1.5m away from the viewer. The effect is surprisingly cool even with a boring debugging GUI when you're in the HMD--it is augmented reality for a virtual reality world.

[DE] Hacked Leap Motion + DK2

At the end of my last post I said I was going to start working with the leap motion controller in a VR environment. Well, I have attached it to the front of an Oculus DK2 using masking tape and have begun working.

After a bit of confusion as to the relative frames of the leap motion controller and Oculus I was able to get the controller sort of working. Below is a video showing me wiggle both hands in front of the oculus.

[MMc] First HUD layer success

The red rectangle in the center of the view is a 2D surface directly composited onto the HMD. The key bug was that I was positioning it behind the head before.

It appears that changing the HMD compositing quality may have been affecting the vertical axis direction and the rendering rate. I'm not sure how this happened, but for the moment I disabled it to avoid the problem. Now, on to actually rendering a 2D GUI into that red square.

[MMc] Starting on GUI rendering layer

I created an ovrLayerType_QuadHeadLocked layer and initialized it. I'm just rendering it solid red for the moment. Such a layer has a 3D position. From looking at the Oculus samples, it appears that rotation is ignored on such a layer and the translation should have a negative Z value.
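The placement convention can be sketched as follows, assuming a head space in which -Z points forward. The `HeadLockedQuad` struct and helper functions are hypothetical stand-ins written for this illustration, not the Oculus SDK layer types, and the distance passed in is up to the caller.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Hypothetical description of a head-locked quad (not an Oculus SDK struct).
struct HeadLockedQuad {
    Vec3  center; // head space, metres; -Z is assumed to be forward
    float width;  // metres
    float height; // metres
};

// Places a quad `distance` metres straight ahead of the viewer.
HeadLockedQuad makeHudQuad(float distance, float width, float height) {
    assert(distance > 0.0f && width > 0.0f && height > 0.0f);
    HeadLockedQuad q = { { 0.0f, 0.0f, -distance }, width, height };
    return q;
}

// A quad with non-negative Z sits at or behind the head and cannot be seen.
bool isInFrontOfViewer(const HeadLockedQuad& q) {
    return q.center.z < 0.0f;
}

// Horizontal angle the quad subtends at the eye, in degrees.
float horizontalAngleDegrees(const HeadLockedQuad& q) {
    const float kPi = 3.14159265f;
    return 2.0f * std::atan((q.width * 0.5f) / -q.center.z) * 180.0f / kPi;
}
```

A check like `isInFrontOfViewer` is cheap to run at startup and would flag a sign error in the translation before anyone puts the headset on.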

Right now the state is not good. Not only does the GUI layer not appear on screen correctly, about 30% of the time the 3D view flips upside down. It is extremely unpleasant when this happens if I'm wearing the HMD. I suspect that this may be an unintended consequence of my automatic effect disabling code based on frame rate.

The right eye also starts flashing cyan (the G3D default background color) every now and then. I think that both visual errors are separate from the GUI work that I've been doing.

[DE] First Leap Motion Results

Because of the extremely helpful instructions on the leap motion site, I have been able to make good progress. I have gotten the leap motion SDK hooked up to my project and have begun experimenting with the hand tracking. While eventually I would like to include a proper hand model, for now I have just been drawing out the joint positions. Below is a photo of my current representation of a hand. The blue spheres represent the joints in the hand, while the green sphere is the center of the palm.

[MM] Kinect/Oculus/G3D playing nicely together

After struggling with annoying include conflicts, redefinition errors, and linkers, we have success #1!

The kinect data is being streamed live to a VR-enabled G3D app. Note that the color data is already properly aligned with the depth, thanks to borrowing some Kinect code from my labmates, who have done extensive work on 3D scene reconstruction using the Kinect and other similar RGBD sensors, some of which required highly accurate color alignment (http://www.graphics.stanford.edu/~niessner/papers/2015/5shading/zollhoefer2015shading.pdf).

If you are very observant, you may notice I am not reaching the target framerate of 75 Hz. This is worrying, but I won't care until I get a decent point cloud first. Back to work!

[MMc] New VRApp Feature Spec, Motion Sickness Notes

Here's my plan for the next few hours:

- Add documentation for VRApp::Settings

- Group initial values of VR options onto VRApp::Settings

- Add VRApp::Settings option to override motion blur

- Add VRApp::Settings option to mirror the undistorted views, instead of the distorted one

- Implement VRApp::DebugMirrorMode::PRE_DISTORTION

- Add VRApp::Settings option to have G3D auto-disable post-FX if your GPU isn't meeting the DK2 frame rate for a scene. The steps will be:

  - Profile in onAfterSceneLoad

  - Disable bloom

  - Disable AO

  - Downgrade antialiasing

  - Disable antialiasing

- First quick-and-dirty version of the UI that will let you see the GUI but not interact with it
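The auto-disable steps in the plan above could be sketched as a simple degradation ladder. This is only an illustration under assumptions: the `Quality` struct, `degradeOneStep`, and `fitToFrameBudget` are hypothetical names and are not the VRApp::Settings API, and `measureFrameMs` stands in for whatever profiling the app does after the scene loads.

```cpp
#include <cassert>

// Hypothetical quality settings; these are not the actual VRApp::Settings fields.
enum class Antialiasing { Full, Reduced, Off };

struct Quality {
    bool bloom = true;
    bool ambientOcclusion = true;
    Antialiasing aa = Antialiasing::Full;
};

// Applies the next degradation step in the planned order.
// Returns false when there is nothing left to turn off.
bool degradeOneStep(Quality& q) {
    if (q.bloom)                      { q.bloom = false;            return true; }
    if (q.ambientOcclusion)           { q.ambientOcclusion = false; return true; }
    if (q.aa == Antialiasing::Full)   { q.aa = Antialiasing::Reduced; return true; }
    if (q.aa == Antialiasing::Reduced){ q.aa = Antialiasing::Off;     return true; }
    return false;
}

// Degrades quality until the measured frame time fits the display's budget.
// measureFrameMs is a caller-supplied profiler: Quality -> milliseconds per frame.
template <typename MeasureFn>
Quality fitToFrameBudget(Quality q, float targetHz, MeasureFn measureFrameMs) {
    assert(targetHz > 0.0f);
    const float budgetMs = 1000.0f / targetHz; // 75 Hz gives roughly 13.3 ms
    while (measureFrameMs(q) > budgetMs) {
        if (!degradeOneStep(q)) { break; } // out of options; accept the low rate
    }
    return q;
}
```

Ordering the steps from least to most visually noticeable means the scene keeps as much quality as the GPU can afford at the target rate.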

[DE] Leap Motion support

In the video the player is in a dark cavern with a single light source. The light source is a torch that is held out in front of the player. He or she is able to illuminate different parts of the cavern by moving the torch around.

For this VR jam I want to create something similar to this demo. I want to have the player control the only light source. Furthermore I want the player to control this light source not with conventional inputs such as a controller or a mouse, but instead with their hands.

To do this, I will be working with the leap motion controller pictured below. This device is meant to track hands and other objects in real time and return 3D models of them.

[MM] Plan for Direct Body Placement in VR

I'm Michael Mara, graduate student at Stanford University under the advisement of Pat Hanrahan and researcher at NVIDIA. For the past week I had been waffling back and forth between a large number of possible projects for the VR Jam. This is a partial list of what I seriously considered:

- AR by scanning room with kinect beforehand and importing model into VR scene.

- Music Visualization

- Big screen TV in VR

- Full virtual machine desktop in VR

- Godzilla stomping buildings (using kinect to track legs and a VR headset for display)

- Video Conferencing in VR (use a kinect or stereo camera to get color+depth, render a 3D floating head/full body with only moving head)

- VR Remote Control car/drone

- RTS game where you are explicitly a commander using VR to control troops (this plays into the limitations of current VR, but would require many jams worth of prereqs (screens in VR, gesture control))

- Soccer Header Game

- Short 3D film in VR movie theatre, with something completely coming out of the screen

- Getting a barbershop haircut

- VR Cards

- VR Ping Pong

- Cast magic spells

- Dodge bullets in the Matrix (Obviously would be better with bodytracking…)

Sunday, August 2, 2015

[MMc] Design for an in-HMD GUI

I'm Morgan McGuire, professor at Williams College and researcher at NVIDIA. For the game jam, I'm designing an adapter class that will allow using the G3D Innovation Engine GUI inside of the HMD view. I'll work on the G3D::VRApp class directly using Oculus DK2.

| Avatar depicts world-space 3D UIs |
